This blog is jointly authored by Amy Chang, Hyrum Anderson, Rajiv Dattani, and Rune Kvist.

We’re excited to announce Cisco as a technical contributor to AIUC-1. The standard will operationalize Cisco’s Integrated AI Security and Safety Framework (AI Security Framework), enabling more secure AI adoption.

AI risks are not theoretical. We’ve seen incidents ranging from swearing chatbots to agents deleting codebases. The financial impact is significant: EY’s recent survey found that 64 percent of companies with over US$1 billion in revenue have lost more than US$1 million to AI failures.

Enterprises are looking for answers on how to navigate AI risks.

Organizations also don’t feel ready to tackle these challenges: Cisco’s 2025 AI Readiness Index found that only 29 percent of companies believe they are adequately equipped to defend against AI threats.

Yet existing frameworks address only narrow slices of the risk landscape, forcing organizations to piece together guidance from multiple sources. This makes it difficult to build a complete understanding of end-to-end AI risk.

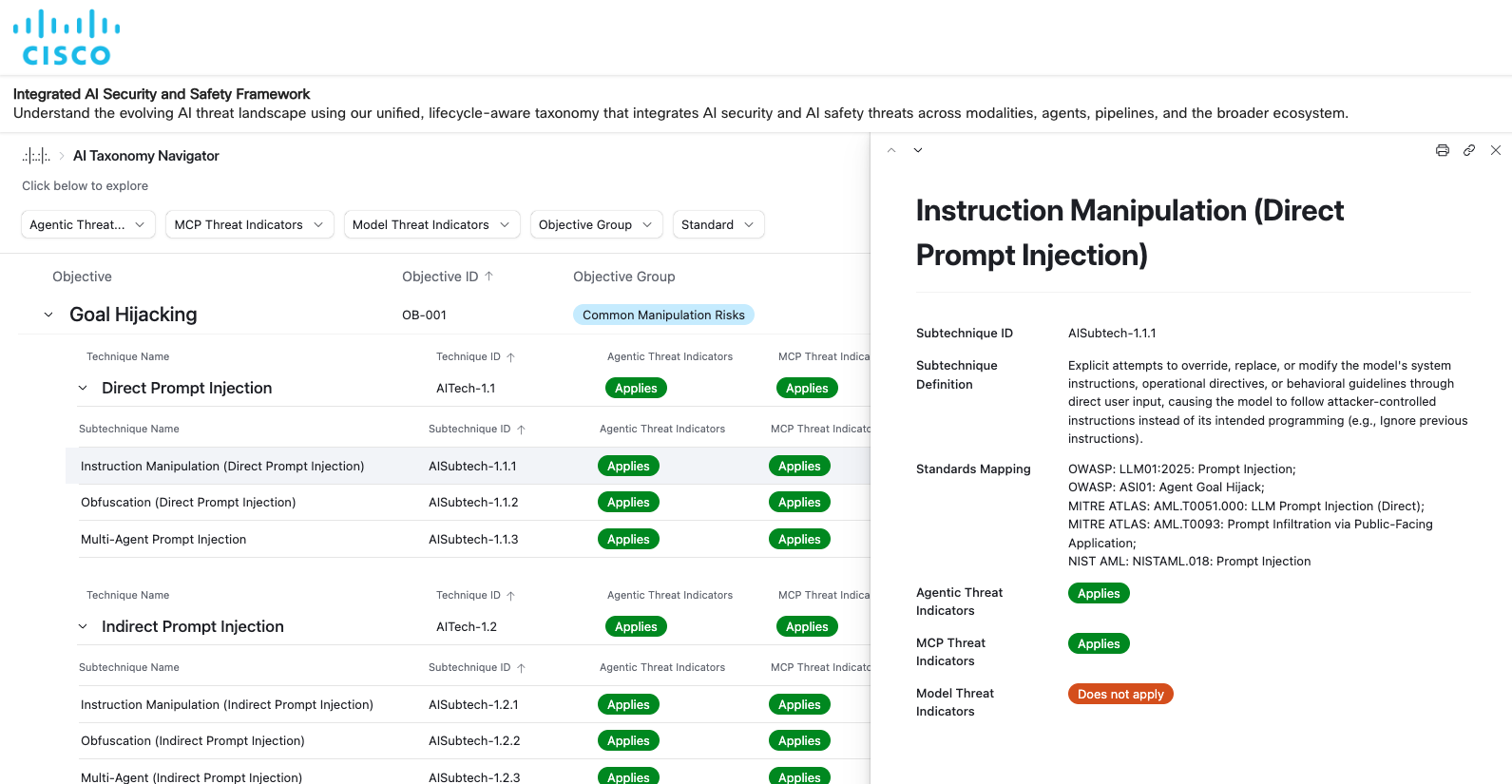

Cisco’s AI Security Framework addresses this gap directly, providing a more holistic understanding of AI security and safety risks across the AI lifecycle.

The framework breaks down the complex landscape of AI security into one that works for multiple audiences. For example, executives can operate at the level of attacker objectives, while security leads can focus on specific attack techniques.

Read more about Cisco’s AI Security Framework here and navigate the taxonomy here.

AIUC-1 operationalizes the framework, enabling secure AI adoption

When evaluating AI agents, AIUC-1 will incorporate the security and safety risks from Cisco’s Framework. This integration will be direct: risks highlighted in Cisco’s Framework map to specific AIUC-1 requirements and controls.

For example, technique AITech-1.1 (direct prompt injection) is actively mitigated by integrating AIUC-1 requirements B001 (third-party testing of adversarial robustness), B002 (detect adversarial input), and B005 (implement real-time input filtering). A detailed crosswalk document mapping the framework to AIUC-1 will be released, once ready, to help organizations understand how to operationally secure themselves.
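To make the idea of a crosswalk concrete, here is a minimal Python sketch of how a team might record the one mapping stated above and check which mapped requirements are still unaddressed. The data structure and the names `Requirement`, `CROSSWALK`, `requirements_for`, and `uncovered` are hypothetical illustrations, not the format of the forthcoming crosswalk document or any AIUC-1 tooling. Only the AITech-1.1 entry comes from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """One AIUC-1 requirement, identified by its ID and a short summary."""
    req_id: str
    summary: str


# Hypothetical in-memory crosswalk: framework technique ID -> AIUC-1 requirements.
# The single entry below is the only mapping stated in this post.
CROSSWALK = {
    "AITech-1.1": [  # direct prompt injection
        Requirement("B001", "third-party testing of adversarial robustness"),
        Requirement("B002", "detect adversarial input"),
        Requirement("B005", "implement real-time input filtering"),
    ],
}


def requirements_for(technique_id):
    """Return the requirements mapped to a technique, or an empty list if unmapped."""
    return CROSSWALK.get(technique_id, [])


def uncovered(technique_id, implemented):
    """Return IDs of mapped requirements that are not in the implemented set."""
    return [
        req.req_id
        for req in requirements_for(technique_id)
        if req.req_id not in implemented
    ]


if __name__ == "__main__":
    # Example: an organization that has only completed B001 so far.
    print(uncovered("AITech-1.1", {"B001"}))
```

A lookup like this lets a security lead start from a specific attack technique and see which controls remain open. It is an illustration of the mapping idea only, and the real crosswalk may be structured quite differently.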

This partnership positions Cisco alongside organizations including MITRE, the Cloud Security Alliance, and Stanford’s Trustworthy AI Research Lab as technical contributors to AIUC-1, collectively building a stronger and deeper understanding of AI risk.

Read more about how AIUC-1 operationalizes emerging AI frameworks here.