By Sila Özeren Hacioglu, Security Research Engineer at Picus Security.

For security leaders, the most dreaded notification is not always an alert from their SOC; it’s a link to a news article sent by a board member. The headline usually details a new campaign by a threat group like FIN8 or a recently exposed massive supply chain vulnerability. The accompanying question is brief but paralyzing by implication: “Are we exposed to this right now?”

In the pre-LLM world, answering that question set off a mad race against an unforgiving clock. Security teams had to wait for vendor SLAs, often eight hours or more for emerging threats, or manually reverse-engineer the attack themselves to build a simulation. Although this approach delivered an accurate response, the time it took to do so created a dangerous window of uncertainty.

AI-driven threat emulation has eliminated much of the investigative delay by accelerating analysis and expanding threat knowledge. However, AI emulation still carries risks due to limited transparency, susceptibility to manipulation, and hallucinations.

At the recent BAS Summit, Picus CTO and Co-founder Volkan Ertürk cautioned that “raw generative AI can create exploit risks nearly as serious as the threats themselves.” Picus addresses this by using an agentic, post-LLM approach that delivers AI-level speed without introducing new attack surfaces.

This blog breaks down what that approach looks like, and why it fundamentally improves the speed and safety of threat validation.

The “Prompt-and-Pray” Trap

The immediate response to the Generative AI boom was an attempt to automate red teaming by simply asking Large Language Models (LLMs) to generate attack scripts. Theoretically, an engineer could feed a threat intelligence report into a model and ask it to “draft an emulation campaign”.

While this approach is undeniably fast, it fails in reliability and safety. As Picus’s Ertürk notes, there’s some danger in taking this approach:

“ … Can you trust a payload that’s built by an AI engine? I don't think so. Right? Maybe it just came up with the real sample that an APT group has been using or a ransomware group has been using. … then you click that binary, and boom, you can have big problems.”

The problem is not only risky binaries. As mentioned above, LLMs are still prone to hallucination. Without strict guardrails, a model might invent TTPs (Tactics, Techniques, and Procedures) that the threat group doesn't actually use, or suggest exploits for vulnerabilities that don't exist. This leaves security teams struggling to validate their defenses against theoretical threats while not taking the time to address actual ones.

To address these issues, the Picus platform adopts a fundamentally different model: the agentic approach.

Stop relying on risky, hallucination-prone LLMs. Picus uses a multi-agent framework to map threat intelligence directly to safe, validated simulations.

Close the gap between a news alert and your defense readiness with the world’s first Agentic BAS platform.

The Agentic Shift: Orchestration Over Generation

The Picus approach, embodied in Smart Threat, moves away from using AI as a code generator and instead uses it as an orchestrator of known, safe components.

Rather than asking AI to create payloads, the system instructs it to map threats to the trusted Picus Threat Library.

“So our approach is … we need to leverage AI, but we need to use it in a smart way… We need to say that, hey, I have a threat library. Map the campaign you built to my TTPs that I know are high quality, low explosive, and just give me an emulation plan based on what I have and on my TTPs.” – Volkan Ertürk, CTO & co-founder of Picus Security.

At the core of this model is a threat library built and refined over 12 years of real-world Picus Labs threat research. Instead of generating malware from scratch, AI analyzes external intelligence and aligns it to a pre-validated knowledge graph of safe atomic actions. This ensures accuracy, consistency, and safety.

To execute this reliably, Picus uses a multi-agent framework rather than a single monolithic chatbot. Each agent has a dedicated function, preventing errors and avoiding scaling issues:

- Planner Agent: Orchestrates the overall workflow
- Researcher Agent: Scours the web for intelligence
- Threat Builder Agent: Assembles the attack chain
- Validation Agent: Checks the work of the other agents to prevent hallucinations
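The division of labor above can be pictured with a minimal Python sketch. It is an illustration only, not Picus's implementation: the `Context` structure, the function names, and the example URL are all hypothetical, and each agent is reduced to a plain function so the hand-off between roles is visible.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Context:
    """Shared state handed from agent to agent (hypothetical structure)."""
    source_url: str
    intel: list[str] = field(default_factory=list)
    chain: list[str] = field(default_factory=list)
    approved: bool = False


def researcher(ctx: Context) -> Context:
    # Stand-in for intelligence gathering; a real agent would fetch and vet sources.
    ctx.intel.append(f"report from {ctx.source_url}")
    return ctx


def threat_builder(ctx: Context) -> Context:
    # Stand-in for assembling an attack chain from the gathered intelligence.
    ctx.chain = [f"step derived from: {item}" for item in ctx.intel]
    return ctx


def validator(ctx: Context) -> Context:
    # Approve only if every chain step traces back to a gathered intel item.
    ctx.approved = bool(ctx.chain) and all(
        any(item in step for item in ctx.intel) for step in ctx.chain
    )
    return ctx


def planner(ctx: Context, agents: list[Callable[[Context], Context]]) -> Context:
    # The planner only decides ordering; each agent keeps a single responsibility.
    for agent in agents:
        ctx = agent(ctx)
    return ctx


result = planner(
    Context("https://example.com/fin8-report"),
    [researcher, threat_builder, validator],
)
```

Keeping each role in its own function means a failure can be traced to one stage, which is the property the article attributes to the multi-agent design.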

Real-Life Case Study: Mapping the FIN8 Attack Campaign

To show how the system works in practice, here is the workflow the Picus platform follows when processing a request related to the “FIN8” threat group. This example illustrates how a single news link can be converted into a safe, accurate emulation profile within hours.

A walkthrough of the same process was demonstrated by Picus CTO Volkan Ertürk during the BAS Summit.

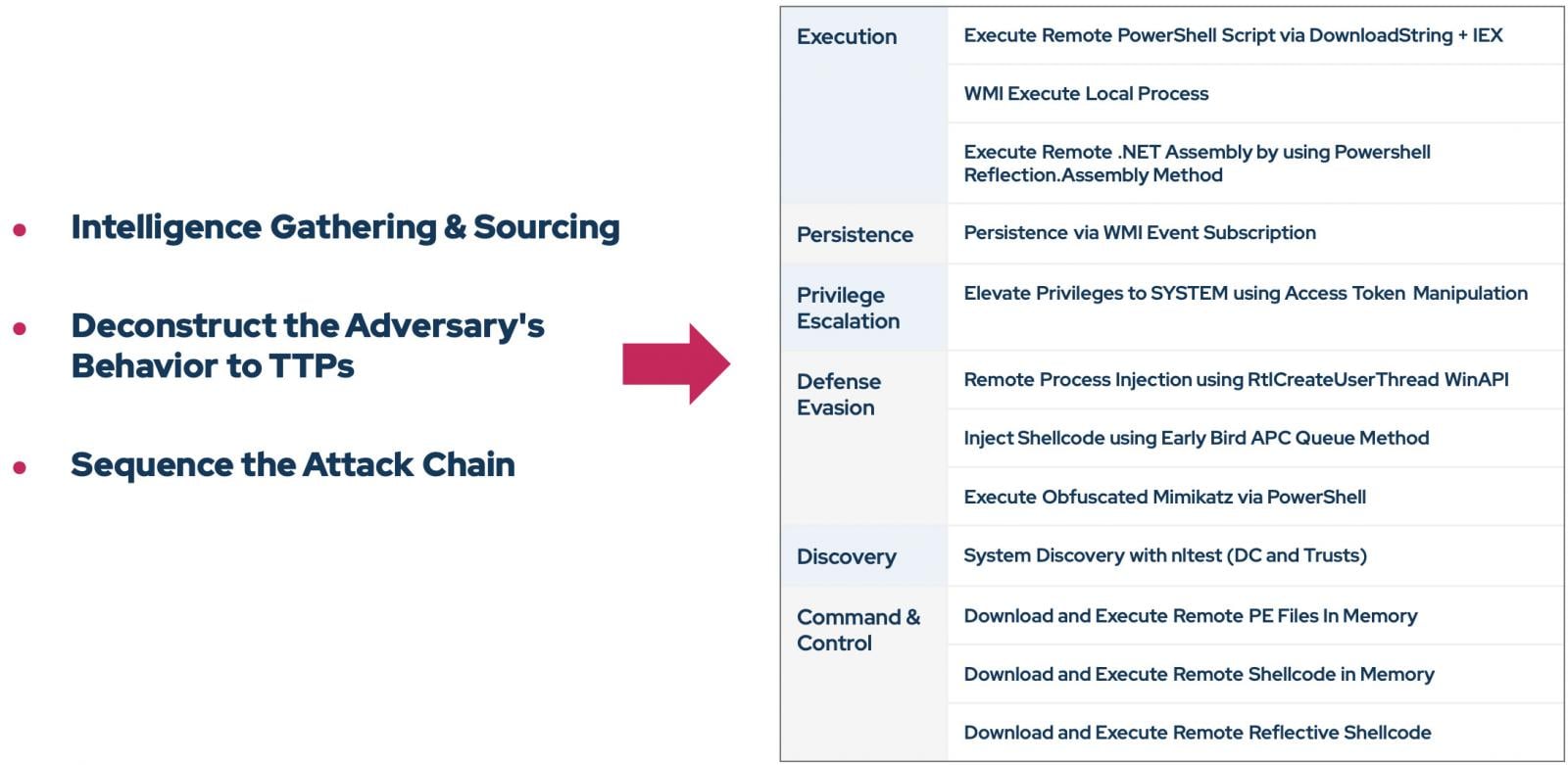

Step 1: Intelligence Gathering and Sourcing

The process begins with a user inputting a single URL, perhaps a fresh report on a FIN8 campaign.

The Researcher Agent doesn't stop at that single source. It crawls for related links, validates the trustworthiness of those sources, and aggregates the data to build a comprehensive “finished intel report.”
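As a rough illustration of the "validate, then aggregate" idea, the sketch below filters candidate links against an allowlist of trusted hosts and removes duplicates. The allowlist, the function name, and the example URLs are assumptions made for this example; the article does not describe how Picus actually scores source trustworthiness.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; a real system would likely use richer trust signals.
TRUSTED_HOSTS = {"attack.mitre.org", "www.cisa.gov"}


def vet_sources(urls, trusted_hosts):
    """Keep each URL once, and only if its host is on the allowlist."""
    seen = set()
    kept = []
    for url in urls:
        host = urlparse(url).hostname or ""
        if host in trusted_hosts and url not in seen:
            seen.add(url)
            kept.append(url)
    return kept


candidates = [
    "https://attack.mitre.org/groups/",
    "https://unknown-blog.example/fin8",
    "https://attack.mitre.org/groups/",  # duplicate of the first entry
]
sources = vet_sources(candidates, TRUSTED_HOSTS)
```

Only the vetted list would then feed the aggregation step, so an untrusted page cannot introduce claims into the finished report.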

Step 2: Deconstruction and Behavior Analysis

Once the intelligence is gathered, the system performs behavioral analysis. It deconstructs the campaign narrative into technical components, identifying the specific TTPs used by the adversary.

The goal here is to understand the flow of the entire attack, not just its static indicators.

Step 3: Safe Mapping via Knowledge Graph

This is the critical “safety valve.”

The Threat Builder Agent takes the identified TTPs and queries the Picus MCP (Model Context Protocol) server. Because the threat library sits on a knowledge graph, the AI can map the adversary’s malicious behavior to a corresponding safe simulation action from the Picus library.

For example, if FIN8 uses a specific method for credential dumping, the AI selects the benign Picus module that tests for that specific weakness without actually dumping any real credentials.
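The "select, never generate" principle can be sketched as a lookup against a fixed library. In the sketch below the technique IDs T1003 (OS Credential Dumping) and T1059 (Command and Scripting Interpreter) are real MITRE ATT&CK identifiers, but the action names, the dictionary standing in for the knowledge graph, and the placeholder ID T9999 are all hypothetical.

```python
# Hypothetical stand-in for the threat library: technique ID -> benign action.
SAFE_LIBRARY = {
    "T1003": "simulate-credential-dump-check",
    "T1059": "simulate-script-interpreter-check",
}


def map_to_safe_actions(ttps, library):
    """Pair each TTP with a pre-validated action; never synthesize a new one."""
    mapped = []
    unmapped = []
    for ttp in ttps:
        action = library.get(ttp)
        if action is None:
            # No library entry: report the gap instead of inventing a payload.
            unmapped.append(ttp)
        else:
            mapped.append((ttp, action))
    return mapped, unmapped


mapped, unmapped = map_to_safe_actions(["T1003", "T9999"], SAFE_LIBRARY)
```

The important design choice is the `unmapped` list: a technique with no vetted counterpart is surfaced to the analyst rather than filled in by the model.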

Step 4: Sequencing and Validation

Finally, the agents sequence these actions into an attack chain that mirrors the adversary’s playbook. A Validation Agent reviews the mapping to ensure that no steps were hallucinated and no errors were introduced.

The output is a ready-to-run simulation profile containing the exact MITRE tactics and Picus actions needed to test organizational readiness.
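A simplified sketch of sequencing and validation follows. It assumes each step carries a tactic, a technique ID, and an action name; the tactic list is a small subset of MITRE ATT&CK tactics in matrix order, while the action names and both function names are hypothetical. The validator flags any step whose technique was not in the source intelligence or whose action is not in the library.

```python
# Subset of ATT&CK tactics, in matrix order, used here only for illustration.
TACTIC_ORDER = ["initial-access", "execution", "credential-access", "exfiltration"]


def sequence_steps(steps):
    """Order steps by where their tactic falls in the attack lifecycle."""
    return sorted(steps, key=lambda step: TACTIC_ORDER.index(step["tactic"]))


def validate_chain(chain, observed_ttps, library_actions):
    """Return a list of problems; an empty list means the chain passed."""
    problems = []
    for step in chain:
        if step["ttp"] not in observed_ttps:
            problems.append(f"{step['ttp']}: not present in the source intelligence")
        if step["action"] not in library_actions:
            problems.append(f"{step['action']}: not a known library action")
    return problems


steps = [
    {"tactic": "credential-access", "ttp": "T1003", "action": "simulate-credential-dump-check"},
    {"tactic": "execution", "ttp": "T1059", "action": "simulate-script-interpreter-check"},
    {"tactic": "exfiltration", "ttp": "T1041", "action": "made-up-action"},
]
chain = sequence_steps(steps)
problems = validate_chain(
    chain,
    observed_ttps={"T1003", "T1059"},
    library_actions={"simulate-credential-dump-check", "simulate-script-interpreter-check"},
)
```

Here the third step is flagged twice, once for a technique the intelligence never mentioned and once for an action outside the library, which is the kind of hallucinated step the validation stage is meant to catch.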

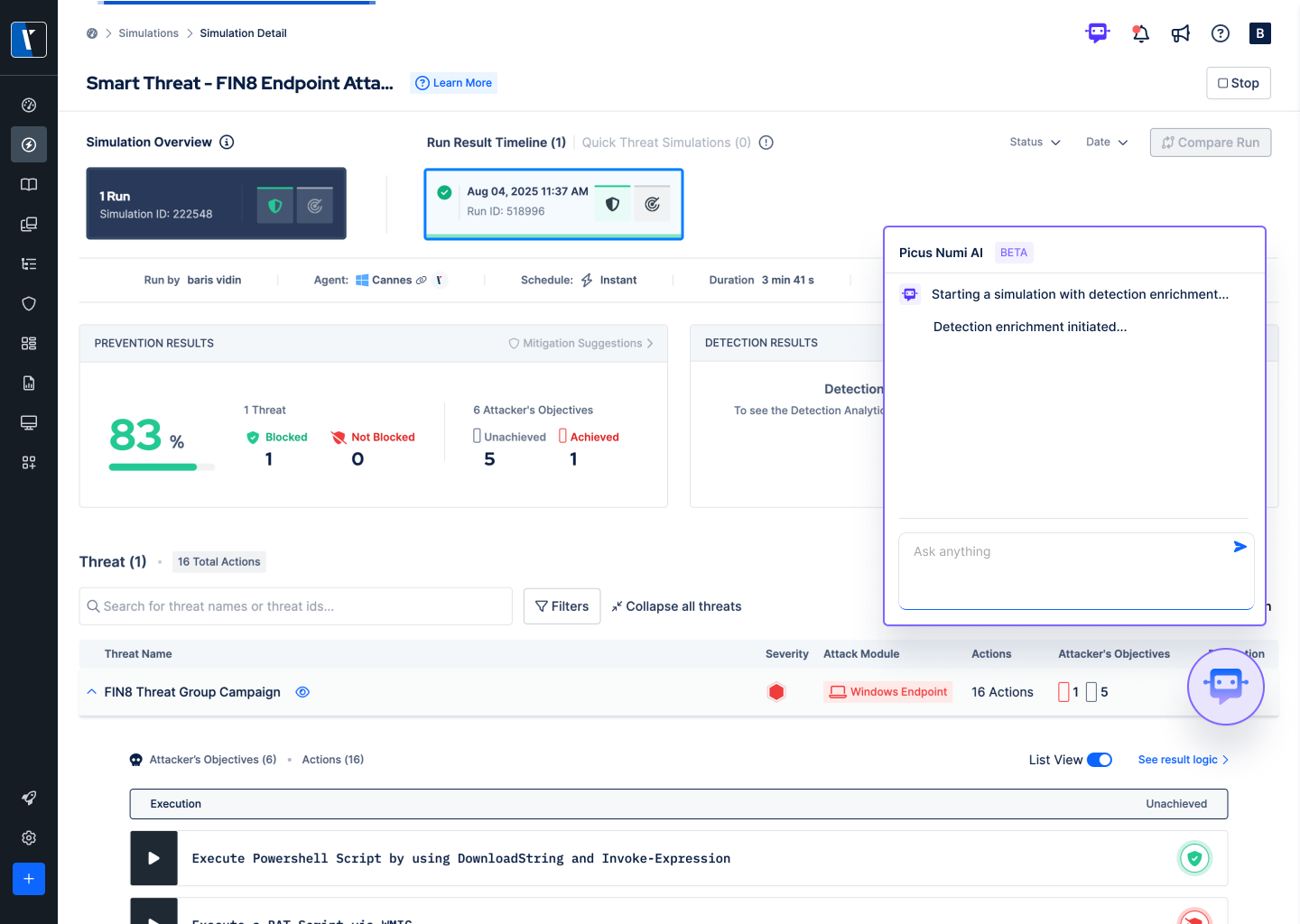

The Future: Conversational Exposure Management

Beyond just building threats, this agentic approach is changing the interface of security validation. Picus is integrating these capabilities into a conversational interface called “Numi AI.”

This moves the user experience from navigating complex dashboards to simpler, clearer, intent-based interactions.

For instance, a security engineer can express high-level intent, “I don't want any configuration threats”, and the AI monitors the environment, alerting the user only when relevant policy changes or emerging threats violate that specific intent.

This shift toward “context-driven security validation” allows organizations to prioritize patching based on what is truly exploitable.

By combining AI-driven threat intelligence with supervised machine learning that predicts control effectiveness on non-agent devices, teams can distinguish between theoretical vulnerabilities and true risks to their specific organization and environments.

In a landscape where threat actors move fast, the ability to turn a headline into a validated defense strategy within hours is no longer a luxury; it’s a necessity.

The Picus approach suggests that the best way to use AI isn't to let it write malware, but to let it organize the defense.

Close the gap between your threat discovery and defense validation efforts.

Request a demo to see Picus’ agentic AI in action, and learn how to operationalize breaking threat intelligence before it’s too late.

Note: This article was expertly written and contributed by Sila Özeren Hacioglu, Security Research Engineer at Picus Security.

Sponsored and written by Picus Security.