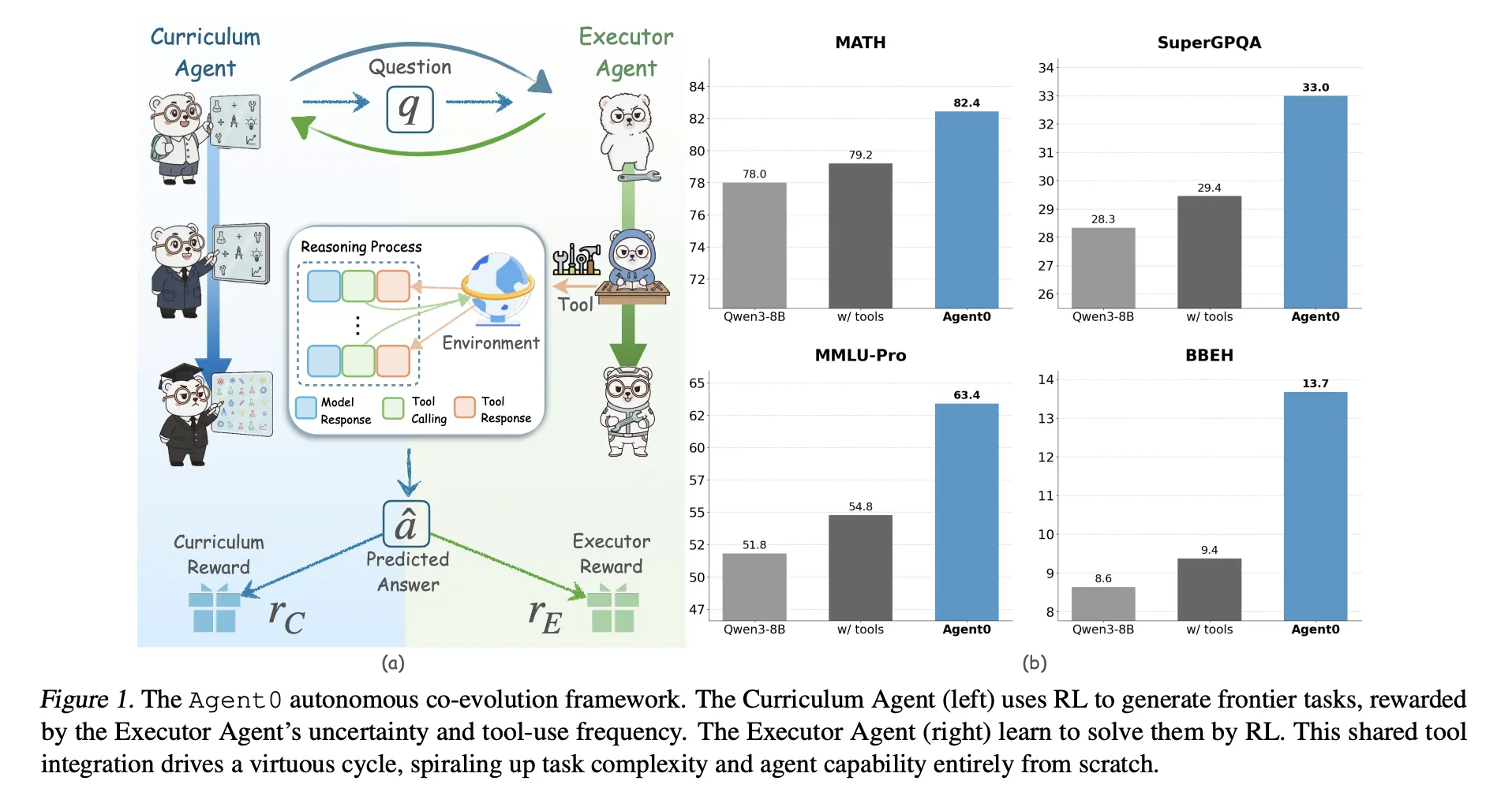

Giant language fashions want large human datasets, so what occurs if the mannequin should create all its personal curriculum and train itself to make use of instruments? A staff of researchers from UNC-Chapel Hill, Salesforce Analysis and Stanford College introduce ‘Agent0’, a completely autonomous framework that evolves high-performing brokers with out exterior knowledge via multi-step co-evolution and seamless instrument integration

Agent0 targets mathematical and normal reasoning. It reveals that cautious job technology and gear built-in rollouts can push a base mannequin past its unique capabilities, throughout ten benchmarks.

Two brokers from one base mannequin

Agent0 begins from a base coverage π_base, for instance Qwen3 4B Base or Qwen3 8B Base. It clones this coverage into:

- a Curriculum Agent πθ that generates duties,

- an Executor Agent πϕ that solves these duties with a Python instrument.

Coaching proceeds in iterations with two phases per iteration:

- Curriculum evolution: The curriculum agent generates a batch of duties. For every job, the executor samples a number of responses. A composite reward measures how unsure the executor is, how typically it makes use of the instrument and the way numerous the batch is. πθ is up to date with Group Relative Coverage Optimization (GRPO) utilizing this reward.

- Executor evolution: The educated curriculum agent is frozen. It generates a big pool of duties. Agent0 filters this pool to maintain solely duties close to the executor’s functionality frontier, then trains the executor on these duties utilizing an ambiguity conscious RL goal referred to as Ambiguity Dynamic Coverage Optimization (ADPO).

This loop creates a suggestions cycle. Because the executor turns into stronger through the use of the code interpreter, the curriculum should generate extra advanced, instrument reliant issues to maintain its reward excessive.

How the curriculum agent scores duties?

The curriculum reward combines three alerts:

Uncertainty reward: For every generated job x, the executor samples ok responses and majority votes a pseudo reply. Self consistency p̂(x) is the fraction of responses that agree with this majority. The reward is maximal when p̂ is near 0.5 and low when duties are too simple or too arduous. This encourages duties which might be difficult however nonetheless solvable for the present executor.

Device use reward: The executor can set off a sandboxed code interpreter utilizing python tags and receives outcomes tagged as output. Agent0 counts the variety of instrument calls in a trajectory and provides a scaled, capped reward, with a cap C set to 4 in experiments. This favors duties that really require instrument calls relatively than pure psychological arithmetic.

Repetition penalty: Inside every curriculum batch, Agent0 measures pairwise similarity between duties utilizing a BLEU based mostly distance. Duties are clustered, and a penalty time period will increase with cluster measurement. This discourages the curriculum from producing many close to duplicates.

A composite reward multiplies a format examine with a weighted sum of uncertainty and gear rewards minus the repetition penalty. This composite worth feeds into GRPO to replace πθ.

How the executor learns from noisy self labels?

The executor can also be educated with GRPO however on multi flip, instrument built-in trajectories and pseudo labels as a substitute of floor reality solutions.

Frontier dataset development: After curriculum coaching in an iteration, the frozen curriculum generates a big candidate pool. For every job, Agent0 computes self consistency p̂(x) with the present executor and retains solely duties the place p̂ lies in an informative band, for instance between 0.3 and 0.8. This defines a difficult frontier dataset that avoids trivial or unattainable issues.

Multi flip instrument built-in rollouts: For every frontier job, the executor generates a trajectory that may interleave:

- pure language reasoning tokens,

pythoncode segments,outputinstrument suggestions.

Technology pauses when a instrument name seems, executes the code in a sandboxed interpreter constructed on VeRL Device, then resumes conditioned on the end result. The trajectory terminates when the mannequin produces a closing reply inside {boxed ...} tags.

A majority vote throughout sampled trajectories defines a pseudo label and a terminal reward for every trajectory.

ADPO, ambiguity conscious RL: Normal GRPO treats all samples equally, which is unstable when labels come from majority voting on ambiguous duties. ADPO modifies GRPO in two methods utilizing p̂ as an ambiguity sign.

- It scales the normalized benefit with an element that will increase with self consistency, so trajectories from low confidence duties contribute much less.

- It units a dynamic higher clipping sure for the significance ratio, which is dependent upon self consistency. Empirical evaluation reveals that mounted higher clipping primarily impacts low chance tokens. ADPO relaxes this sure adaptively, which improves exploration on unsure duties, as visualized by the up clipped token chance statistics.

Outcomes on mathematical and normal reasoning

Agent0 is carried out on high of VeRL and evaluated on Qwen3 4B Base and Qwen3 8B Base. It makes use of a sandboxed Python interpreter as the only exterior instrument.

The analysis staff consider on ten benchmarks:

- Mathematical reasoning: AMC, Minerva, MATH, GSM8K, Olympiad Bench, AIME24, AIME25.

- Common reasoning: SuperGPQA, MMLU Professional, BBEH.

They report cross@1 for many datasets and imply@32 for AMC and AIME duties.

For Qwen3 8B Base, Agent0 reaches:

- math common 58.2 versus 49.2 for the bottom mannequin,

- general normal common 42.1 versus 34.5 for the bottom mannequin.

Agent0 additionally improves over sturdy knowledge free baselines corresponding to R Zero, Absolute Zero, SPIRAL and Socratic Zero, each with and with out instruments. On Qwen3 8B, it surpasses R Zero by 6.4 share factors and Absolute Zero by 10.6 factors on the general common. It additionally beats Socratic Zero, which depends on exterior OpenAI APIs.

Throughout three co evolution iterations, common math efficiency on Qwen3 8B will increase from 55.1 to 58.2 and normal reasoning additionally improves per iteration. This confirms steady self enchancment relatively than collapse.

Qualitative examples present that curriculum duties evolve from primary geometry inquiries to advanced constraint satisfaction issues, whereas executor trajectories combine reasoning textual content with Python calls to achieve right solutions.

Key Takeaways

- Absolutely knowledge free co evolution: Agent0 eliminates exterior datasets and human annotations. Two brokers, a curriculum agent and an executor agent, are initialized from the identical base LLM and co evolve solely through reinforcement studying and a Python instrument.

- Frontier curriculum from self uncertainty: The curriculum agent makes use of the executor’s self consistency and gear utilization to attain duties. It learns to generate frontier duties which might be neither trivial nor unattainable, and that explicitly require instrument built-in reasoning.

- ADPO stabilizes RL with pseudo labels: The executor is educated with Ambiguity Dynamic Coverage Optimization. ADPO down weights extremely ambiguous duties and adapts the clipping vary based mostly on self consistency, which makes GRPO type updates steady when rewards come from majority vote pseudo labels.

- Constant features on math and normal reasoning: On Qwen3 8B Base, Agent0 improves math benchmarks from 49.2 to 58.2 common and normal reasoning from 34.5 to 42.1, which corresponds to relative features of about 18 p.c and 24 p.c.

- Outperforms prior zero knowledge frameworks: Throughout ten benchmarks, Agent0 surpasses earlier self evolving strategies corresponding to R Zero, Absolute Zero, SPIRAL and Socratic Zero, together with people who already use instruments or exterior APIs. This reveals that the co evolution plus instrument integration design is a significant step past earlier single spherical self play approaches.

Editorial Notes

Agent0 is a crucial step towards sensible, knowledge free reinforcement studying for instrument built-in reasoning. It reveals {that a} base LLM can act as each Curriculum Agent and Executor Agent, and that GRPO with ADPO and VeRL Device can drive steady enchancment from majority vote pseudo labels. The tactic additionally demonstrates that instrument built-in co evolution can outperform prior zero knowledge frameworks corresponding to R Zero and Absolute Zero on sturdy Qwen3 baselines. Agent0 makes a powerful case that self evolving, instrument built-in LLM brokers have gotten a practical coaching paradigm.

Take a look at the PAPER and Repo. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be happy to observe us on Twitter and don’t overlook to hitch our 100k+ ML SubReddit and Subscribe to our Publication. Wait! are you on telegram? now you’ll be able to be a part of us on telegram as nicely.