Image by Author

# Introduction

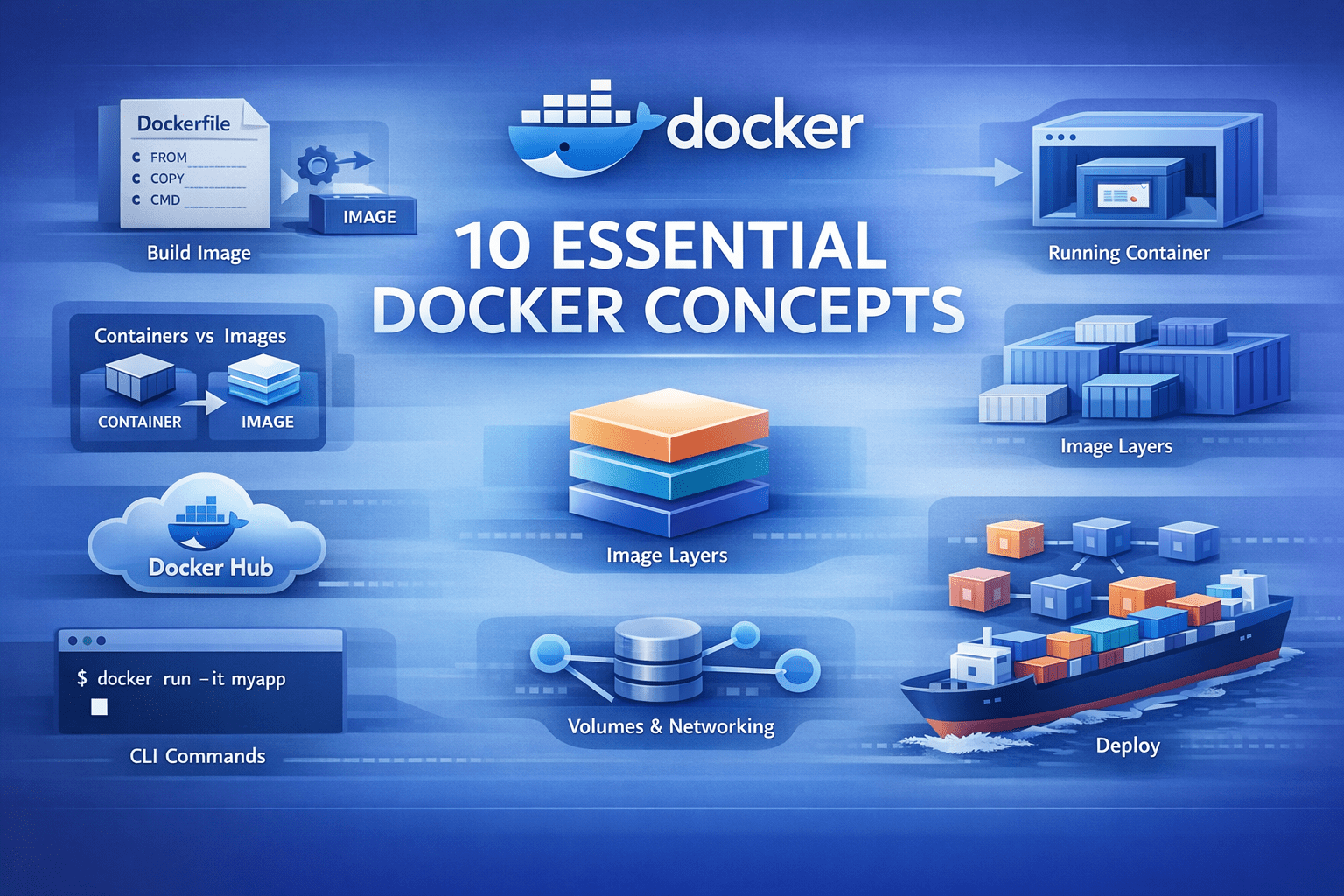

Docker has simplified how we build and deploy applications. But when you're getting started with Docker, the terminology can be confusing. You'll likely hear terms like "images," "containers," and "volumes" without really understanding how they fit together. This article will help you understand the core Docker concepts you need to know.

Let's get started.

# 1. Docker Image

A Docker image is an artifact that contains everything your application needs to run: the code, runtime, libraries, environment variables, and configuration files.

Images are immutable. Once you create an image, it doesn't change. This ensures your application runs the same way on your laptop, on your coworker's machine, and in production, eliminating environment-specific bugs.

Here is how you build an image from a Dockerfile. A Dockerfile is a recipe that defines how the image is built:

```bash
docker build -t my-python-app:1.0 .
```

The -t flag tags your image with a name and version. The . sets the current directory as the build context, and Docker looks for a file named Dockerfile there by default. Once built, this image becomes a reusable template for your application.

# 2. Docker Container

A container is what you get when you run an image. It's an isolated environment where your application actually executes.

```bash
docker run -d -p 8000:8000 my-python-app:1.0
```

The -d flag runs the container in the background. The -p 8000:8000 flag maps port 8000 on your host to port 8000 in the container, making your app accessible at localhost:8000.
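Port mapping only works if the app inside the container listens on all interfaces (0.0.0.0). Traffic forwarded by -p arrives on the container's network interface, not on its loopback address. The same applies to Flask: its development server defaults to 127.0.0.1, so a Flask app.py would need app.run(host="0.0.0.0", port=8000). The article's app.py isn't shown, so here is a hypothetical stand-in that uses only Python's standard library instead of Flask. The handler name, the response text, and the optional PORT variable are illustrative assumptions.

```python
# app.py: hypothetical stand-in for the article's Flask app (stdlib only).
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text message."""

    def do_GET(self) -> None:
        body = b"Hello from inside the container!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def create_server(host: str = "0.0.0.0", port: int = 8000) -> HTTPServer:
    # 0.0.0.0 listens on every interface, so traffic forwarded by
    # `docker run -p 8000:8000` can reach the app; 127.0.0.1 would not.
    return HTTPServer((host, port), HelloHandler)


if __name__ == "__main__":
    # PORT is an assumed optional override; 8000 matches EXPOSE and -p.
    server = create_server(port=int(os.environ.get("PORT", "8000")))
    # flush=True so the message appears in `docker logs` right away.
    print(f"Listening on port {server.server_address[1]}", flush=True)
    server.serve_forever()
```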

You can run multiple containers from the same image, and they operate independently. This is how you can test different versions side by side or scale horizontally by running ten copies of the same application.

Containers are lightweight. Unlike virtual machines, they don't boot a full operating system. They share the host's kernel and start in seconds.

# 3. Dockerfile

A Dockerfile contains instructions for building an image. It's a text file that tells Docker exactly how to set up your application environment.

Here is a Dockerfile for a Flask application:

```dockerfile
FROM python:3.11-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

EXPOSE 8000

CMD ["python", "app.py"]
```

Let's break down each instruction:

- `FROM python:3.11-slim`: Start from a base image that has Python 3.11 installed. The slim variant is smaller than the standard image.
- `WORKDIR /app`: Set the working directory to /app. All subsequent instructions run from here.
- `COPY requirements.txt .`: Copy just the requirements file first, not all your code yet.
- `RUN pip install --no-cache-dir -r requirements.txt`: Install the Python dependencies. The --no-cache-dir flag stops pip from keeping its download cache, which keeps the image smaller.
- `COPY . .`: Now copy the rest of your application code.
- `EXPOSE 8000`: Document that the app uses port 8000. This doesn't publish the port by itself; you still map it with -p when you run the container.
- `CMD ["python", "app.py"]`: Define the command to run when the container starts.

The order of these instructions determines how long your builds take, which is why we need to understand layers.

# 4. Image Layers

Every instruction in a Dockerfile creates a new layer. These layers stack on top of each other to form the final image.

Docker caches each layer. When you rebuild an image, Docker checks whether each layer needs to be recreated. If nothing it depends on has changed, Docker reuses the cached layer instead of rebuilding it. Once one layer has to be rebuilt, every layer after it is rebuilt too.

This is why we copy requirements.txt before copying the entire application: your dependencies change less often than your code. When you modify app.py, Docker reuses the cached layer that installed the dependencies and only rebuilds from the code copy onward.

Here is the layer structure from our Dockerfile:

1. Base Python image (`FROM`)
2. Set working directory (`WORKDIR`)
3. Copy `requirements.txt` (`COPY`)
4. Install dependencies (`RUN pip install`)
5. Copy application code (`COPY`)
6. Metadata about the port (`EXPOSE`)
7. Default command (`CMD`)

If you only change your Python code, Docker rebuilds only layers 5–7. Layers 1–4 come from the cache, which makes builds much faster. Understanding layers helps you write efficient Dockerfiles: put frequently changing files at the end and stable dependencies at the beginning.
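To make the caching rule concrete, here is a toy model. It is not Docker's actual implementation. Each step's cache key is a hash of the previous step's key, the instruction text, and, for COPY steps, the contents of the copied files. Once one key changes, every later key changes too. That is why editing app.py rebuilds layers 5–7, while editing requirements.txt rebuilds layers 3–7. The file contents used here are hypothetical.

```python
import hashlib

# Toy model of the example Dockerfile: (instruction, files the step reads).
# `COPY . .` lists every file in the hypothetical build context.
STEPS = [
    ("FROM python:3.11-slim", []),
    ("WORKDIR /app", []),
    ("COPY requirements.txt .", ["requirements.txt"]),
    ("RUN pip install --no-cache-dir -r requirements.txt", []),
    ("COPY . .", ["app.py", "requirements.txt"]),
    ("EXPOSE 8000", []),
    ('CMD ["python", "app.py"]', []),
]


def cache_keys(context: dict[str, str]) -> list[str]:
    """Return one cache key per step; each key chains in the previous one."""
    keys: list[str] = []
    parent = ""
    for instruction, files in STEPS:
        h = hashlib.sha256()
        for part in (parent, instruction):
            h.update(part.encode() + b"\0")
        for name in files:  # COPY steps also depend on file contents
            h.update(name.encode() + b"\0" + context[name].encode() + b"\0")
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(old: dict[str, str], new: dict[str, str]) -> list[int]:
    """1-based numbers of the steps whose cache key changed (cache misses)."""
    pairs = zip(cache_keys(old), cache_keys(new))
    return [i for i, (a, b) in enumerate(pairs, start=1) if a != b]


if __name__ == "__main__":
    # Hypothetical file contents for the build context.
    base = {"requirements.txt": "flask==3.0.0\n", "app.py": "print('v1')\n"}
    code_change = {**base, "app.py": "print('v2')\n"}
    deps_change = {**base, "requirements.txt": "flask==3.0.3\n"}
    print(rebuilt_steps(base, code_change))  # [5, 6, 7]
    print(rebuilt_steps(base, deps_change))  # [3, 4, 5, 6, 7]
```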

# 5. Docker Volumes

Containers are ephemeral. When you delete a container, everything inside it disappears, including data your application created.

Docker volumes solve this problem. They're directories that live outside the container's filesystem and persist after the container is removed.

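```bash
# The official postgres image needs a superuser password to initialize.
docker run -d \
  -e POSTGRES_PASSWORD=secret \
  -v postgres-data:/var/lib/postgresql/data \
  postgres:15
```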

This creates a named volume called postgres-data and mounts it at /var/lib/postgresql/data inside the container. Your database files survive container restarts and deletions.
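From the application's point of view, a volume is just a directory. This hypothetical sketch keeps a visit counter in a file under DATA_DIR, an assumed environment variable that defaults to /data. If you mount a named volume there, for example with -v app-data:/data, the count survives when the container is removed and recreated. Without the volume, it resets.

```python
import os
from pathlib import Path


def data_dir() -> Path:
    # DATA_DIR is a hypothetical setting; mount a volume at this path,
    # e.g. `docker run -v app-data:/data ...`.
    return Path(os.environ.get("DATA_DIR", "/data"))


def record_visit(directory: Path) -> int:
    """Increment and return a counter stored in <directory>/visits.txt."""
    directory.mkdir(parents=True, exist_ok=True)
    counter = directory / "visits.txt"
    count = int(counter.read_text()) if counter.exists() else 0
    count += 1
    counter.write_text(str(count))
    return count


if __name__ == "__main__":
    print(f"Visit number {record_visit(data_dir())}")
```

Running the script twice against the same directory mimics two container lifetimes sharing one volume: the second run prints 2.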

You can also mount directories from your host machine, which is useful during development:

```bash
docker run -d \
  -v "$(pwd)":/app \
  -p 8000:8000 \
  my-python-app:1.0
```

This mounts your current directory into the container at /app. Changes you make to files on your host appear immediately in the container, enabling live development without rebuilding the image.

There are three types of mounts:

- Named volumes (`postgres-data:/path`): managed by Docker, best for production data
- Bind mounts (`/host/path:/container/path`): mount any host directory, good for development
- tmpfs mounts: store data in memory only, useful for temporary data

# 6. Docker Hub

Docker Hub is a public registry where people share Docker images. It is Docker's default registry, so when you write FROM python:3.11-slim, Docker pulls that image from Docker Hub.

You can search for images from the command line with docker search followed by a search term.

Then pull the ones you need to your machine:

```bash
docker pull redis:7-alpine
```

You can also push your own images to share them with others or deploy them to servers. Log in first, and replace username with your Docker Hub username:

```bash
docker login
docker tag my-python-app:1.0 username/my-python-app:1.0
docker push username/my-python-app:1.0
```
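Image names follow a `[registry/][namespace/]repository[:tag]` pattern. When parts are missing, Docker fills in defaults: the registry is Docker Hub (docker.io), official images live under the `library` namespace, and the tag is `latest`. The parser here is a simplified sketch of that normalization, for illustration only. It ignores digests (`@sha256:...`) and the other edge cases that the real reference grammar handles.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Simplified normalization of an image reference (no digest support)."""
    # A tag is a ':' after the last '/', so "localhost:5000/app" keeps
    # its port instead of treating "5000/app" as a tag.
    name, tag = ref, "latest"
    if ref.rfind(":") > ref.rfind("/"):
        colon = ref.rfind(":")
        name, tag = ref[:colon], ref[colon + 1:]

    first, _, rest = name.partition("/")
    # The first component is a registry host if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name

    # Single-name Docker Hub images are official images under "library/".
    if registry == "docker.io" and "/" not in repository:
        repository = f"library/{repository}"
    return ImageRef(registry, repository, tag)
```

For example, parse_image_ref("redis:7-alpine") yields registry docker.io, repository library/redis, and tag 7-alpine. For username/my-python-app:1.0, the username becomes the namespace on Docker Hub.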

Docker Hub hosts official images for popular software like PostgreSQL, Redis, Nginx, and Python, along with a huge number of community-published images. Official images are curated by Docker, often together with the upstream projects, and follow best practices.

For private projects, you can create private repositories on Docker Hub or use alternative registries like Amazon Elastic Container Registry (ECR), Google Artifact Registry (the successor to Google Container Registry, GCR), or Azure Container Registry (ACR).

# 7. Docker Compose

Real applications need multiple services. A typical web app has a Python backend, a PostgreSQL database, a Redis cache, and maybe a worker process.

Docker Compose lets you define all these services in a single YAML file and manage them together.

Create a docker-compose.yml file:

```yaml
# The top-level "version" key is optional; recent Compose releases ignore it.
version: '3.8'

services:
  web:
    build: .
    ports:
      - "8000:8000"
    environment:
      - DATABASE_URL=postgresql://postgres:secret@db:5432/myapp
      - REDIS_URL=redis://cache:6379
    depends_on:
      - db
      - cache
    volumes:
      - .:/app

  db:
    image: postgres:15-alpine
    volumes:
      - postgres-data:/var/lib/postgresql/data
    environment:
      - POSTGRES_PASSWORD=secret
      - POSTGRES_DB=myapp

  cache:
    image: redis:7-alpine

volumes:
  postgres-data:
```

Now start your entire application stack with one command:

```bash
docker-compose up -d
```

This starts three containers: web, db, and cache. Docker Compose handles networking automatically: the web service can reach the database at hostname db and Redis at hostname cache.
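Inside the web container, the application only sees these hostnames through its configuration. Here is a minimal sketch, assuming the app reads the DATABASE_URL and REDIS_URL values set in the compose file. It shows that the host part of each URL is just the service name, which Compose's DNS resolves to the right container. The helper name is hypothetical.

```python
import os
from urllib.parse import urlparse


def service_endpoint(env_var: str, default_port: int) -> tuple[str, int]:
    """Return (hostname, port) from a URL stored in an environment variable."""
    url = urlparse(os.environ[env_var])
    if url.hostname is None:
        # Don't echo the URL itself: it may contain a password.
        raise ValueError(f"{env_var} has no hostname")
    return url.hostname, url.port or default_port


if __name__ == "__main__":
    # Fallback values mirror the `environment:` entries in docker-compose.yml.
    os.environ.setdefault("DATABASE_URL", "postgresql://postgres:secret@db:5432/myapp")
    os.environ.setdefault("REDIS_URL", "redis://cache:6379")
    print(service_endpoint("DATABASE_URL", 5432))
    print(service_endpoint("REDIS_URL", 6379))
```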

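To stop and remove the containers and the network Compose created, run:

```bash
docker-compose down
```

Named volumes such as postgres-data are kept unless you add the -v flag.

To rebuild after code changes:

```bash
docker-compose up -d --build
```

Docker Compose is essential for development environments. Instead of installing PostgreSQL and Redis on your machine, you run them in containers with one command.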

# 8. Container Networks

When you run multiple containers, they need to talk to each other. Docker creates virtual networks that connect containers.

By default, Docker Compose creates a network for all services defined in your docker-compose.yml. Containers use service names as hostnames. In our example, the web container connects to PostgreSQL using db:5432 because db is the service name.

You can also create custom networks manually:

```bash
docker network create my-app-network
docker run -d --network my-app-network --name api my-python-app:1.0
docker run -d --network my-app-network --name cache redis:7
```

Now the api container can reach Redis at cache:6379. This works because containers on a user-defined network like this one can resolve each other by container name; the default bridge network doesn't provide that name resolution. Docker offers several network drivers, and these are the ones you'll use most often:

- bridge: The default driver for containers on a single host
- host: The container uses the host's network directly (no isolation)
- none: The container has no network access

Networks provide isolation. Containers on different networks can't communicate unless they're explicitly connected. This is useful for security, because you can separate your frontend, backend, and database networks.

To see all networks, run:

```bash
docker network ls
```

To inspect a network and see which containers are connected, run:

```bash
docker network inspect my-app-network
```
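Being on the same network doesn't mean the other service is ready. The short form of Compose's depends_on, as used in the compose file, only controls start order. The db container can therefore be running while PostgreSQL is still initializing. The long form can wait for a healthcheck; a simpler approach is to retry a TCP connection to the service name before starting the app. This is one hypothetical way to do that with Python's standard library. The function name and timeouts are assumptions.

```python
import socket
import time


def wait_for_service(host: str, port: int, timeout: float = 30.0,
                     interval: float = 0.5) -> bool:
    """Retry a TCP connection until it succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # On a shared Docker network, `host` can be a service or
            # container name such as "db" or "cache".
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unresolvable name, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    ready = wait_for_service("cache", 6379)
    print("cache reachable" if ready else "cache not reachable")
```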

# 9. Environment Variables and Docker Secrets

Hardcoding configuration is asking for trouble. Your database password shouldn't be the same in development and production, and your API keys definitely shouldn't live in your codebase.

Docker handles this through environment variables. Pass them in at runtime with the -e or --env flag, and your container gets the config it needs without baking values into the image.

Docker Compose makes this cleaner. Point to a .env file and keep your secrets out of version control. Swap in .env.production when you deploy, or define environment variables directly in your compose file when they aren't sensitive.

Docker Secrets take this further for production environments, especially in Swarm mode. Environment variables can show up in logs or process listings. Secrets, by contrast, are encrypted in transit and at rest, then mounted as files in the container (under /run/secrets/ by default). Only services that need them get access. They're designed for passwords, tokens, certificates, and anything else that would be catastrophic if leaked.

The pattern is simple: separate code from configuration. Use environment variables for regular config and secrets for sensitive data.
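On the application side, the pattern can look like this sketch. It assumes secrets are mounted as files under /run/secrets/<name>, the default location for Swarm and Compose secrets, and it falls back to an environment variable, which is convenient in local development. The function name is hypothetical. The naming convention is also an assumption: a lowercase file name for the secret and an uppercase name for the variable.

```python
import os
from pathlib import Path
from typing import Optional


def get_setting(name: str, secrets_dir: str = "/run/secrets",
                default: Optional[str] = None) -> Optional[str]:
    """Prefer a mounted secret file, then an environment variable, then a default."""
    # Assumed convention: secret file "db_password" <-> env var "DB_PASSWORD".
    secret_file = Path(secrets_dir) / name.lower()
    if secret_file.is_file():
        # Secret files often end with a newline, so strip surrounding whitespace.
        return secret_file.read_text().strip()
    return os.environ.get(name.upper(), default)


if __name__ == "__main__":
    db_password = get_setting("DB_PASSWORD")
    debug = get_setting("DEBUG", default="false")
    # Report whether the secret exists, never its value.
    print("password configured:", db_password is not None)
    print("debug:", debug)
```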

# 10. Container Registry

Docker Hub works fine for public images, but you probably don't want your company's application images publicly accessible. A private container registry stores your Docker images and controls who can access them. Popular options include Amazon ECR, Google Artifact Registry, Azure Container Registry, and private repositories on Docker Hub.

For each of these options, you follow a similar process to push, pull, and use images. For example, here is what you'd do with ECR.

First, your local machine or continuous integration and continuous deployment (CI/CD) system authenticates with ECR. This lets Docker securely interact with your private image registry instead of a public one. Next, the locally built Docker image is tagged with a fully qualified name that includes:

- The AWS account's registry address
- The repository name
- The image version (tag)

This step tells Docker where the image will live in ECR. The image is then pushed to the private ECR repository. Once pushed, the image is centrally stored, versioned, and accessible to authorized systems.
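The naming and command sequence can be sketched in Python. ECR registry addresses follow the pattern <account-id>.dkr.ecr.<region>.amazonaws.com. The account ID, region, and repository used here are placeholders, and the helper only builds the command lines without running them. Authentication uses the AWS CLI's aws ecr get-login-password piped into docker login --password-stdin. The sketch assumes the repository already exists and the inputs are trusted, so no shell quoting is done.

```python
def ecr_registry(account_id: str, region: str) -> str:
    """Registry address for an AWS account's private ECR registry."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Fully qualified image name: registry address, repository, and tag."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


def ecr_push_commands(local_image: str, account_id: str, region: str,
                      repository: str, tag: str) -> list[str]:
    """Shell commands to authenticate, tag, and push (returned, not executed)."""
    registry = ecr_registry(account_id, region)
    uri = ecr_image_uri(account_id, region, repository, tag)
    return [
        # 1. Authenticate Docker with the private registry.
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        # 2. Give the local image its fully qualified ECR name.
        f"docker tag {local_image} {uri}",
        # 3. Upload it; servers later authenticate the same way and
        #    run `docker pull <uri>`.
        f"docker push {uri}",
    ]


if __name__ == "__main__":
    # Placeholder values: replace with your own account, region, and repo.
    for cmd in ecr_push_commands("my-python-app:1.0", "123456789012",
                                 "us-east-1", "my-python-app", "1.0"):
        print(cmd)
```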

Production servers authenticate with ECR and pull the image from the private registry. This keeps your deployment pipeline fast and secure. Building images on production servers is slow and requires access to the source code. Instead, you build once, push to the registry, and pull on every server.

Many CI/CD systems integrate with container registries. For example, a GitHub Actions workflow can build the image and push it to ECR, and your Kubernetes cluster then pulls it automatically.

# Wrapping Up

These ten concepts form Docker's foundation. Here is how they connect in a typical workflow:

- Write a Dockerfile with instructions for your app, and build an image from it
- Run a container from the image
- Use volumes to persist data
- Set environment variables and secrets for configuration and sensitive data
- Create a `docker-compose.yml` for multi-service apps and let Docker networks connect your containers
- Push your image to a registry, then pull and run it anywhere

Start by containerizing a simple Python script. Add dependencies with a requirements.txt file. Then introduce a database using Docker Compose. Each step builds on the previous concepts. Docker isn't complicated once you understand these fundamentals: it's a tool that packages applications consistently and runs them in isolated environments.

Happy exploring!

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource overviews and coding tutorials.